- ChatGPT Toolbox's Newsletter

AI Pulse

🤫 AI Pulse - the AI insight everyone will be talking about (you get it first).

Nvidia Shocks Market with Surprise ‘Blackwell Ultra’ Chip and Morpheus Robotics Platform

In a surprise mid-quarter virtual event on August 6, 2025, Nvidia CEO Jensen Huang unveiled the "Blackwell Ultra," a more powerful iteration of its already dominant Blackwell architecture, and announced Morpheus, a new software and hardware platform aimed at accelerating the deployment of general-purpose robotics. The announcements sent ripples through the tech industry, signaling Nvidia's aggressive strategy not only to lead in AI training and inference but also to build the foundational stack for the next wave of embodied AI. The move is seen as a direct response to growing competition in the custom silicon space and a forward-looking bet that robotics, not just data centers, will drive the next trillion dollars of value in the AI era.

The Blackwell Ultra is not merely an incremental update; it represents a significant leap in performance and efficiency, even over the B200 GPU that began shipping earlier this year. The key innovation lies in its adoption of a 3D chiplet stacking technology, moving beyond the current CoWoS (Chip-on-Wafer-on-Substrate) packaging. By stacking logic and memory dies vertically, Nvidia has drastically reduced the physical distance data must travel, cutting down on latency and energy consumption. The Ultra boasts a staggering 240 gigabytes of HBM3e memory, up from 192GB in the B200, with a memory bandwidth exceeding 6 terabytes per second. Huang claimed the new chip delivers a 30% improvement in training performance for models larger than one trillion parameters and, crucially, a 50% improvement in inference efficiency for real-time applications. This efficiency gain is paramount for the economic viability of deploying powerful generative AI models at scale. During the keynote, Huang presented a benchmark showing a single Blackwell Ultra GPU outperforming a rack of previous-generation H100s on specific structured data tasks, a testament to the architectural enhancements.

Perhaps more groundbreaking is the Morpheus platform. Named after the Greek god of dreams, Morpheus is an integrated development environment and hardware reference design intended to be the "CUDA for Robotics." It consists of three core components: the Morpheus SDK, the Jetson Thor robotics controller, and the "Guardian" simulation environment. The SDK provides pre-trained foundation models for common robotics tasks like visual perception, navigation, and manipulation. These models, which Nvidia calls "RoboNets," can be fine-tuned on specific customer data. The Jetson Thor controller is a new system-on-a-chip designed specifically for real-time robotic control loops, integrating a Blackwell Ultra-derived GPU core with a high-performance ARM CPU and dedicated safety processors. Finally, the Guardian simulation environment, powered by Omniverse, allows developers to train and validate robotic systems in a photorealistic, physically accurate digital twin before deploying them in the real world. This "sim-to-real" pipeline is critical for reducing development costs and ensuring safety.

Expert reaction has been a mix of awe and concern. Dr. Anya Sharma, a robotics professor at Carnegie Mellon University, commented, "Morpheus is an audacious attempt to create a standardized operating system for general-purpose robots. By providing the core perception and control stack, Nvidia is lowering the barrier to entry for countless companies. However, it also risks creating a powerful walled garden, similar to what CUDA did for GPU computing. The industry will need to decide if the convenience outweighs the potential for vendor lock-in." The implications for competitors are stark. Companies like Tesla, which develops its own custom Dojo hardware and FSD software stack, now face a well-funded, generalized platform that could enable other automotive and robotics companies to leapfrog their capabilities. Similarly, chip startups focused on edge inference will have to contend with the immense performance of the new Jetson Thor controller.

The announcement underscores a strategic pivot. While data center sales remain Nvidia's cash cow, the company is clearly anticipating a future where AI computation is not just confined to massive cloud servers but is distributed across billions of intelligent, autonomous devices. By creating the end-to-end platform for this future—from the simulation environment to the robotics chip to the AI models themselves—Nvidia aims to secure its dominance for the next decade. The company is betting that the same developers who learned CUDA for scientific computing and AI will now learn Morpheus for robotics, creating a deep and defensible moat.

The full impact of Blackwell Ultra and Morpheus will unfold over the next few years as the products reach developers and enterprise customers. The immediate next steps will be the release of the Morpheus SDK to early-access partners and the first shipments of Jetson Thor development kits by the end of the year. For the broader AI community, this signals a clear direction: the era of disembodied language models is maturing, and the frontier is now moving into the physical world. Nvidia is not just selling shovels for the AI gold rush; it is now selling the blueprints and engines for the entire robotic workforce.

DeepMind and Isomorphic Labs Unveil Gen-Forge, an AI That Designs Novel Therapeutic Proteins from Scratch

Google's AI division, DeepMind, in collaboration with its sister company Isomorphic Labs, has published a landmark paper in Nature detailing "Gen-Forge," a new generative AI model capable of designing entirely novel proteins with specific therapeutic functions. The announcement, made on August 7, 2025, represents a significant leap beyond predicting the structure of existing proteins, as famously achieved by AlphaFold. Gen-Forge instead operates as a "protein inventor," generating atomic-level blueprints for new biologics that do not exist in nature, designed to bind to specific disease targets with high affinity and stability. This breakthrough could dramatically accelerate drug discovery, potentially leading to new treatments for cancers, autoimmune disorders, and genetic diseases.

For decades, drug discovery has been a process of painstaking trial and error, screening vast libraries of existing molecules to find a potential match for a disease target. Gen-Forge flips this paradigm on its head. It starts with the desired function—for example, "inhibit the activity of the KRAS protein, a common cancer driver"—and works backward to generate a de novo protein structure that accomplishes this goal. The model’s architecture is a sophisticated fusion of a diffusion model, similar to those used in image generation AIs like Midjourney, and a graph neural network (GNN) that understands the complex geometry and biochemical properties of amino acid interactions. The diffusion component starts with a random cloud of atoms (a "noise" state) and iteratively refines it into a coherent, folded protein structure, while the GNN acts as a physics-aware guide, ensuring that the generated protein obeys the laws of biochemistry and is likely to be stable and synthesizable.

The training process for Gen-Forge was immense. It was fed not only the entire Protein Data Bank (PDB), containing the structures of hundreds of thousands of known proteins, but also vast datasets on protein-ligand interactions, binding affinities, and kinetic data. A key innovation described in the paper is a technique called "functional conditioning." During training, the model was given not just a protein structure but also a text description of its function (e.g., "[enzyme_class:hydrolase,target:amyloid_beta]"). This allows researchers to prompt the model with a desired function at inference time, directly steering the generation process. The paper presents several compelling examples of Gen-Forge's capabilities. In one digitally simulated experiment, the model was tasked with designing a protein to neutralize a specific viral surface protein. Gen-Forge generated over one hundred novel candidates, and when these were tested in silico, a significant percentage showed higher binding affinity than existing monoclonal antibodies.
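To make the "functional conditioning" idea more concrete, here is a minimal sketch of how a tag in the bracketed format quoted above could be turned into key-value fields before being fed to a model. The function name `parse_function_tag` and the validation rules are assumptions made for illustration; the article does not describe how Gen-Forge actually parses or encodes these descriptions.

```python
def parse_function_tag(tag):
    """Parse a tag like '[key:value,key:value]' into a dict.

    Hypothetical helper: the bracketed format comes from the article's
    example, but the parsing rules here are an assumption.
    """
    text = tag.strip()
    if not (text.startswith("[") and text.endswith("]")):
        raise ValueError("tag must be wrapped in square brackets")

    fields = {}
    for part in text[1:-1].split(","):
        key, sep, value = part.partition(":")
        key, value = key.strip(), value.strip()
        if not sep or not key or not value:
            raise ValueError(f"malformed field: {part!r}")
        fields[key] = value
    return fields


if __name__ == "__main__":
    example = "[enzyme_class:hydrolase,target:amyloid_beta]"
    print(parse_function_tag(example))
```

In a real system the parsed fields would presumably be mapped to embeddings that condition the generator; that step is omitted here because the article gives no details about it.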

In a crucial step towards real-world validation, Isomorphic Labs synthesized several of Gen-Forge's AI-designed proteins in the lab and confirmed that they folded into the exact three-dimensional structures predicted by the model. Furthermore, these lab-created proteins successfully performed their intended biological functions in cell cultures. Demis Hassabis, CEO of both DeepMind and Isomorphic Labs, stated in a press briefing, "With AlphaFold, we learned to read the book of life. With Gen-Forge, we are beginning to write new words in it. This is a pivotal moment for biology and medicine, moving us from a paradigm of discovery to one of design."

The implications are profound. Biopharmaceutical companies could potentially shorten the pre-clinical phase of drug development from years to months. Instead of screening millions of compounds, they could generate a few dozen highly promising, purpose-built protein candidates. This could lead to "programmable medicine," where treatments are rapidly designed to combat new pathogens or target patient-specific cancer mutations. Dr. Harold Varmus, a Nobel laureate and former director of the National Cancer Institute, called the work "a stunning demonstration of AI's potential in biomedicine," but he also urged caution. "The path from a beautifully designed protein in a computer to a safe and effective drug in a human is long and fraught with peril. The human immune system is notoriously unpredictable, and these novel proteins could elicit unexpected responses. The clinical trials will be the ultimate arbiter of success."

Gen-Forge also raises complex questions about intellectual property and access. Who owns the patent to a protein designed by an AI? How can access to this powerful technology be democratized to prevent a handful of large corporations from dominating the future of medicine? These are challenges that regulators, ethicists, and the scientific community will need to address proactively.

The immediate next step for Isomorphic Labs is to use Gen-Forge to build a proprietary pipeline of novel drug candidates for its internal development and to form partnerships with pharmaceutical giants. The release of the paper and the accompanying model details (though not the full code or weights) will catalyze a wave of research worldwide, as academic and commercial labs rush to replicate and build upon these findings. Gen-Forge has not just provided a new tool; it has opened up an entirely new, computationally driven field of protein engineering that may well define the next century of medicine.

Mistral AI Disrupts the Open-Source Landscape Again with ‘Mistral-Next’ 8x22B Mixture-of-Experts Model

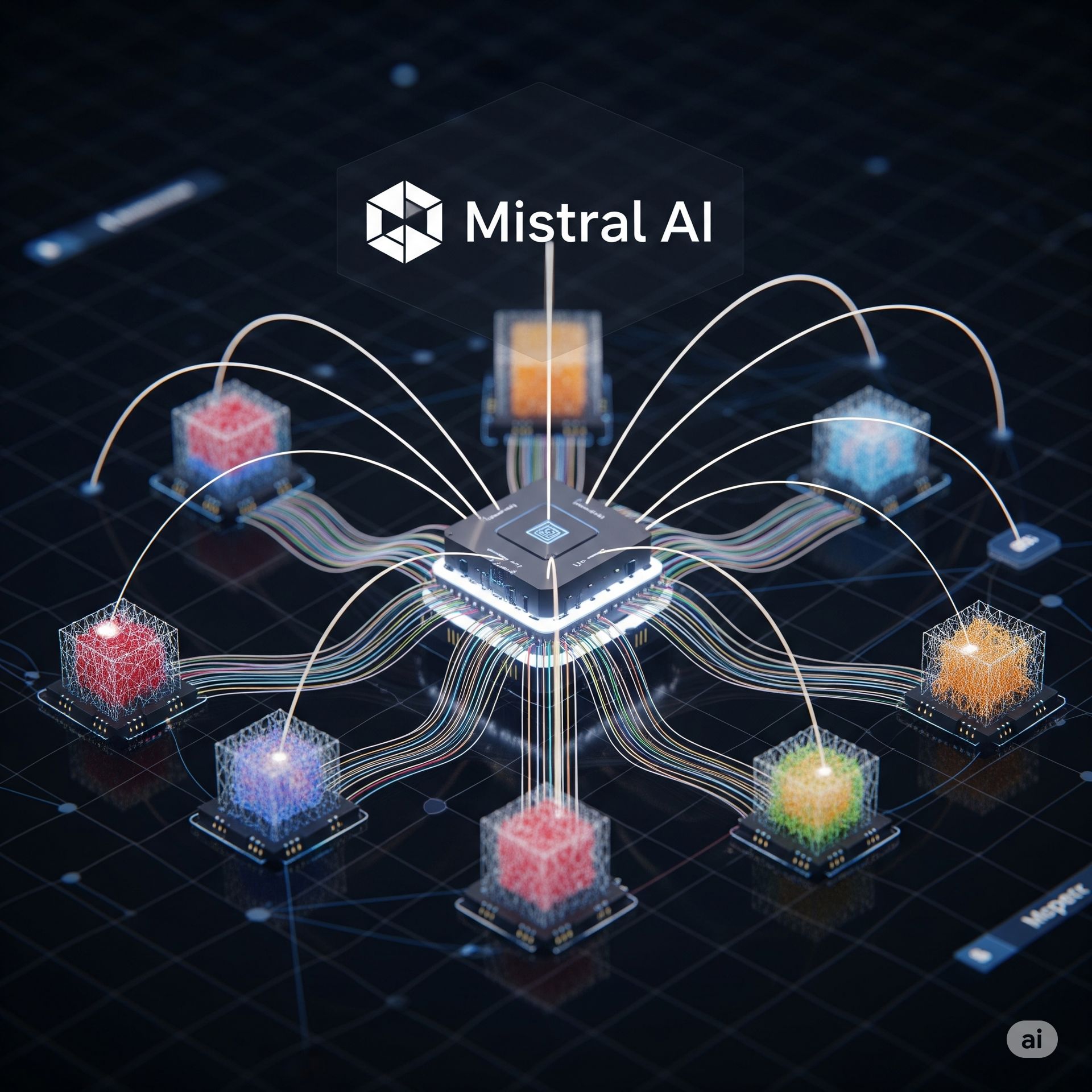

In a move characteristic of its disruptive style, Paris-based AI lab Mistral AI on August 6, 2025, released "Mistral-Next," a powerful new open-source large language model, directly to the community via a torrent link posted on X (formerly Twitter). The model, an 8x22B Mixture-of-Experts (MoE), continues Mistral's strategy of pushing the boundaries of what's possible with open, freely available AI. While proprietary models from OpenAI, Anthropic, and Google still hold the top spots on some benchmarks, Mistral-Next appears to close the gap significantly, offering performance that rivals GPT-4 while being small enough to run on high-end consumer or prosumer hardware. The release has electrified the open-source AI community and poses a significant challenge to the business models of closed-source AI providers.

Mistral-Next is an MoE model, a sophisticated architecture that is becoming increasingly popular for its efficiency. Instead of a single, monolithic neural network where all parameters are engaged for every token generated, an MoE model consists of multiple "expert" sub-networks and a "router" network. For Mistral-Next 8x22B, this means there are eight distinct 22-billion-parameter expert networks. For any given token of input, the router network intelligently selects which two of the eight experts are best suited to process it. This means that while the model has a total of 176 billion parameters (8×22B), only 44 billion parameters are active during inference for any single token. This clever design allows the model to have a vast amount of embedded knowledge (from its 176B total parameters) while being significantly faster and less computationally expensive to run than a dense model of equivalent size.
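The routing step and the parameter arithmetic described above can be sketched in a few lines. This is a toy illustration, not Mistral's implementation: the experts are stand-in functions, the router scores are random placeholders, and renormalizing the softmax over only the two selected experts is an assumption (one common choice in top-k MoE layers). The parameter counts follow the article's simplified 8 × 22B accounting.

```python
import math
import random

NUM_EXPERTS = 8           # from the article
PARAMS_PER_EXPERT_B = 22  # billions of parameters per expert, from the article
TOP_K = 2                 # experts selected per token, from the article


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k=TOP_K):
    """Return [(expert_index, weight)] for the k highest-scoring experts.

    Weights are a softmax over the selected logits only, so they sum to 1.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(token_value, router_logits, experts):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](token_value)
               for i, w in route_top_k(router_logits))


# Toy experts: expert i scales its input by (i + 1). Purely illustrative.
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]

total_params_b = NUM_EXPERTS * PARAMS_PER_EXPERT_B  # 8 * 22 = 176
active_params_b = TOP_K * PARAMS_PER_EXPERT_B       # 2 * 22 = 44

if __name__ == "__main__":
    rng = random.Random(0)
    logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # placeholder router output
    print("selected experts:", route_top_k(logits))
    print("layer output:", moe_forward(1.0, logits, experts))
    print(f"total {total_params_b}B, active {active_params_b}B "
          f"({active_params_b / total_params_b:.0%} of parameters per token)")
```

Only the two selected experts are ever called for a given token, which is where the compute saving comes from: by the article's figures, roughly a quarter of the parameters do work on each token.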

Early, informal testing by community members who quickly downloaded and ran the model suggests remarkable capabilities. The model demonstrates strong performance in coding, mathematics, and complex multilingual reasoning tasks. Initial reports from community-run evaluations such as EleutherAI's LM Evaluation Harness and Chatbot Arena show Mistral-Next outperforming all other open-source models, including its predecessor Mixtral 8x7B and Llama 3 70B, and trading blows with the original GPT-4 on a variety of tasks. A key feature highlighted by Mistral is its massive context window of 128,000 tokens, enabling it to process and reason over entire books or large codebases in a single prompt. This is a critical feature for enterprise use cases like legal document analysis or complex software development.

The strategic implications of this release are multifaceted. First, it reinforces Mistral's position as the undisputed leader in open-source AI. By consistently releasing powerful models without the usage restrictions common to proprietary systems, Mistral empowers a global community of developers, researchers, and startups to build on their work. This fosters an ecosystem that can innovate much faster than any single company. Second, it places immense pressure on its competitors. OpenAI and Anthropic must now justify the high cost and API-based access to their models when a nearly-as-good alternative is available for free. For businesses, the choice between paying per-token for a closed model versus hosting an open model like Mistral-Next on their own infrastructure becomes a critical strategic and economic decision.

Arthur Mensch, CEO of Mistral AI, posted a brief statement following the release: "Innovation thrives in the open. Mistral-Next is our latest contribution to the community that builds alongside us. The best and most creative applications of AI will come from everywhere, not from a handful of centralized labs. We build the engines; you are the drivers." This philosophy resonates deeply with the open-source ethos and has helped Mistral attract top talent and significant investment, despite its open-weights approach. Analysts note that Mistral's business model likely relies on providing premium enterprise services, support, and fine-tuning capabilities for companies that want to use their open models but lack the in-house expertise to do so effectively.

Of course, the release is not without its controversies. The unfiltered and unrestricted nature of the model means it can be used for malicious purposes, such as generating misinformation, spam, or malicious code, with greater ease than models guarded by safety filters and API monitoring. This "dual-use" nature of powerful open-source AI remains a central point of debate in the AI safety community. Regulators, in particular, are struggling to find a balance between fostering innovation and mitigating potential harms, and releases like this one force the issue into the spotlight.

The next steps for the AI community are clear: a flurry of activity will now center on fine-tuning, quantizing (compressing), and building applications on top of Mistral-Next. We can expect to see dozens of specialized versions emerge in the coming weeks, tailored for everything from creative writing to medical advice. This release is a powerful reminder that the future of AI is not a single path. While giant, closed models will continue to push the absolute frontier of capability, the open-source movement, championed by players like Mistral, is ensuring that powerful AI remains a decentralized, accessible, and dynamic technology for all.

US Commerce Department and NIST Finalize Mandatory Reporting Rules for High-Compute AI Models

The U.S. Department of Commerce, in coordination with the National Institute of Standards and Technology (NIST), on August 7, 2025, issued its final rule mandating reporting requirements for the development and training of powerful artificial intelligence models. This regulation, which stems from President Biden's 2023 Executive Order on AI, establishes specific compute thresholds that, when crossed, will require AI developers to report their activities, safety testing procedures, and model capabilities to the federal government. The move marks the most significant concrete step by the U.S. government to date to bring oversight to the AI frontier, creating a formal mechanism for monitoring potential national security risks and other large-scale societal dangers posed by next-generation models.

The final rule establishes a clear, tiered system for reporting. Any AI model trained using a quantity of computing power greater than $10^{26}$ floating-point operations (FLOPs) is now subject to mandatory reporting. This threshold is designed to capture the largest and most capable foundation models, such as those developed by OpenAI, Google DeepMind, Anthropic, and other leading labs. The reporting must occur before the training run begins and must include details about the planned computational resources, the dataset composition (including safeguards for copyrighted and personal information), and the security measures in place to prevent the theft of the model's weights. Once training is complete, a post-training report is required, which must detail the results of extensive safety testing, often referred to as "red-teaming," conducted to identify potentially hazardous capabilities.
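To get a feel for where the compute threshold sits, the sketch below estimates training compute with the widely used rule of thumb of about 6 FLOPs per parameter per training token for dense transformers. That approximation is an assumption brought in for illustration; it is not part of the rule as described here, and the two example training runs are hypothetical.

```python
REPORTING_THRESHOLD_FLOPS = 1e26  # threshold stated in the article


def estimate_training_flops(num_parameters, num_training_tokens):
    """Rough training compute using the ~6 * N * D rule of thumb.

    Assumption: dense transformer, one pass over the data. Real accounting
    would depend on the architecture and on the rule's own definitions.
    """
    return 6.0 * num_parameters * num_training_tokens


def requires_report(num_parameters, num_training_tokens,
                    threshold=REPORTING_THRESHOLD_FLOPS):
    """True if the estimated compute exceeds the reporting threshold."""
    return estimate_training_flops(num_parameters, num_training_tokens) > threshold


if __name__ == "__main__":
    # Hypothetical runs, chosen only to land on either side of the threshold.
    runs = {
        "70B params, 15T tokens": (70e9, 15e12),
        "1T params, 20T tokens": (1e12, 20e12),
    }
    for name, (params, tokens) in runs.items():
        flops = estimate_training_flops(params, tokens)
        print(f"{name}: ~{flops:.1e} FLOPs, report required: "
              f"{requires_report(params, tokens)}")
```

Under this approximation, a 70-billion-parameter model trained on 15 trillion tokens comes in more than an order of magnitude below the threshold, while a trillion-parameter run on 20 trillion tokens would cross it.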

A key and heavily debated component of the final rule is the definition of "hazardous capabilities." According to the document, this includes, but is not limited to, the ability to assist in the design of chemical, biological, radiological, or nuclear (CBRN) weapons; the capacity to autonomously execute cyberattacks; the ability to engage in deceptive self-preservation behaviors; or the power to be used for mass persuasion or manipulation in ways that could destabilize democratic processes. NIST's AI Safety Institute is tasked with developing the standardized testing protocols for these capabilities. Companies must submit the results of these standardized tests, and the Institute will have the authority to conduct independent evaluations if a model is deemed to be of particularly high risk.

The reaction from the industry has been bifurcated. Major AI labs like OpenAI and Anthropic, which had previously made voluntary safety commitments, publicly welcomed the regulation as a necessary step for responsible stewardship of the technology. In a statement, Anthropic's CEO Dario Amodei said, "Clear rules of the road are essential for building public trust and ensuring that the development of AGI proceeds safely. We have been actively collaborating with NIST and the Commerce Department and believe this rule strikes a reasonable balance." However, other players, particularly those in the open-source community and venture capitalists, have expressed concerns that the regulation could stifle innovation and cement the dominance of incumbent players.

A prominent venture capitalist, speaking on condition of anonymity, argued, "The compliance burden associated with this rule is immense. It requires a level of legal and technical overhead that a startup can't easily afford. This will create a chilling effect on ambitious projects and may push cutting-edge research to other countries with more permissive regulatory environments. The $10^{26}$ FLOP threshold seems high now, but with algorithmic efficiency improving, it could soon capture far more than just the frontier models." The rule also has significant implications for cloud computing providers like Amazon Web Services, Microsoft Azure, and Google Cloud, who will now be required to track and report on large-scale training runs conducted on their platforms, effectively making them enforcers of the new regulation.

The international dimension is also critical. U.S. officials stated that they are working closely with allies, particularly in the European Union and the United Kingdom, to harmonize reporting standards. The goal is to create a global baseline for AI oversight to prevent a "race to the bottom" where companies develop dangerous AI in jurisdictions with lax regulations. This regulatory framework sets a precedent that other nations will likely watch closely, and potentially emulate, as they formulate their own approaches to governing AI.

The immediate next step is the implementation phase. The Department of Commerce will establish a new Office for AI Safety and Reporting to receive and analyze the submitted information. NIST will expedite the release of its first version of the standardized testing framework. For AI developers, the race is on to build internal compliance teams and integrate the new safety testing and reporting requirements into their MLOps pipelines. This regulation fundamentally alters the calculus of building frontier AI models in the United States, transforming it from a purely technical and commercial endeavor into a highly regulated activity with significant national security implications.

AetherAI Unveils Chronos-1, a Novel 'Temporal State Model' Aiming to Dethrone the Transformer

A San Francisco-based startup named AetherAI has emerged from stealth mode with the publication of a paper on arXiv detailing "Chronos-1," a new AI architecture it calls a "Temporal State Model" (TSM). The paper, released on August 6, 2025, makes the bold claim that Chronos-1 can outperform the dominant transformer architecture on a wide range of tasks involving long-sequence time-series data, while being significantly more computationally efficient. If the claims hold up under scrutiny, Chronos-1 could represent the first major architectural shift away from transformers for a specific but critical domain of AI, with massive implications for fields like finance, climate modeling, logistics, and healthcare monitoring.

For the last several years, the transformer architecture, with its self-attention mechanism, has been the undisputed king of AI. However, its core strength is also its greatest weakness. The self-attention mechanism, which allows every token in a sequence to attend to every other token, has a computational and memory complexity that scales quadratically with the sequence length $n$, denoted as $O(n^2)$. This makes processing very long sequences (such as years of stock market data, high-frequency sensor readings, or long-term climate data) prohibitively expensive. AetherAI's Chronos-1 is designed to solve this exact problem. It eschews self-attention entirely, instead adopting an architecture inspired by State Space Models (SSMs) like Mamba, but with a novel twist.
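The gap between quadratic and linear scaling is easy to underestimate, so here is a small back-of-the-envelope sketch. It counts the pairwise attention scores for a single head in a single layer and compares that with one state update per position for a linear-time model. The 2-byte score size and the sequence lengths are illustrative assumptions, and the memory figure applies to a naive implementation that materializes the full score matrix.

```python
BYTES_PER_SCORE = 2  # assumption: 16-bit attention scores


def attention_score_entries(seq_len):
    """Pairwise scores for one head in one layer: every token vs. every token."""
    return seq_len * seq_len


def linear_model_steps(seq_len):
    """A linear-time sequence model performs one state update per position."""
    return seq_len


def naive_score_matrix_bytes(seq_len, bytes_per_score=BYTES_PER_SCORE):
    """Memory needed if the full n-by-n score matrix is stored at once."""
    return attention_score_entries(seq_len) * bytes_per_score


if __name__ == "__main__":
    for n in (1_000, 100_000, 1_000_000):
        gib = naive_score_matrix_bytes(n) / 2**30
        print(f"n={n:>9,}: {attention_score_entries(n):.1e} scores "
              f"({gib:,.2f} GiB per head per layer) "
              f"vs {linear_model_steps(n):,} state updates")
```

Doubling the sequence length quadruples the attention work but only doubles the work of a linear-time model. Fused attention kernels such as FlashAttention avoid storing the full matrix, but the amount of computation still grows quadratically.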

The core of Chronos-1 is a continuous-time state update mechanism. Instead of processing discrete tokens like a transformer, the TSM conceptualizes data as a continuous signal evolving over time. The model maintains an internal state vector, $s(t)$, which is updated using a learned differential equation: $\frac{ds(t)}{dt} = f(s(t), x(t); \Theta)$. Here, $x(t)$ is the input at time $t$, and $f$ is a complex neural network parameterized by weights $\Theta$. This continuous formulation allows the model to naturally handle irregularly sampled data, where measurements are not taken at perfectly regular intervals, a common problem in real-world scenarios like medical records or industrial sensor logs. The architecture reportedly scales linearly with sequence length, $O(n)$, allowing it to process sequences that are orders of magnitude longer than what is practical for transformers.
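A continuous-time state update of this kind can be illustrated with a very small numerical sketch. Everything specific below is an assumption: the article does not describe the network f, the solver, or the state size, so this uses a tiny hand-written `dynamics` function with made-up weights in `THETA` and a plain forward Euler step, holding each input constant until the next observation arrives. The point is only to show how the gap between timestamps enters the update, which is what lets irregular sampling be handled without resampling.

```python
import math

# Hypothetical parameters (the article's weights Θ) for a 2-dimensional state.
THETA = {
    "W": [[0.5, -0.2], [0.1, 0.3]],  # state-to-state weights
    "U": [1.0, -1.0],                # input-to-state weights
    "b": [0.0, 0.0],                 # bias
}


def dynamics(state, x, theta):
    """Stand-in for f(s, x; Θ): ds/dt = tanh(W s + U x + b) - s."""
    deriv = []
    for i in range(len(state)):
        pre = sum(theta["W"][i][j] * state[j] for j in range(len(state)))
        pre += theta["U"][i] * x + theta["b"][i]
        deriv.append(math.tanh(pre) - state[i])
    return deriv


def euler_step(state, x, dt, theta):
    """One forward Euler step: s(t + dt) ≈ s(t) + dt * f(s(t), x(t); Θ)."""
    deriv = dynamics(state, x, theta)
    return [s + dt * d for s, d in zip(state, deriv)]


def integrate(observations, theta, state_dim):
    """Fold (timestamp, value) pairs, sorted by time, into a fixed-size state.

    Each value is held constant until the next timestamp, so the final
    observation only marks the end of the last interval.
    """
    state = [0.0] * state_dim
    for (t0, x0), (t1, _) in zip(observations, observations[1:]):
        state = euler_step(state, x0, t1 - t0, theta)
    return state


if __name__ == "__main__":
    # Irregularly spaced readings: gaps of 0.1, 0.6, and 0.05 time units.
    readings = [(0.0, 1.0), (0.1, 0.5), (0.7, -0.3), (0.75, 0.2)]
    print(integrate(readings, THETA, state_dim=2))
```

The loop makes one pass over the data and keeps a state of fixed size, so time and memory grow linearly with the number of observations. A production model would likely use a more accurate solver or a closed-form discretization rather than forward Euler.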

The AetherAI paper presents a compelling set of benchmark results. On the "Long Range Arena" benchmark suite, designed to test model performance on long-context tasks, Chronos-1 reportedly outperforms all existing transformer and SSM-based models. In a financial forecasting task using tick-by-tick stock data over a full year, the model allegedly achieved a 20% lower mean squared error than the best-performing transformer variant. Similarly, in a climate modeling experiment predicting El Niño-Southern Oscillation patterns from decades of ocean temperature data, the TSM demonstrated a superior ability to capture long-range dependencies that are crucial for accurate long-term forecasts.

The founders of AetherAI, a team of former researchers from Google Brain and Stanford's AI Lab, argue that the transformer was fundamentally designed for the static, discrete world of natural language, not the dynamic, continuous nature of time itself. "We are trying to fit the square peg of self-attention into the round hole of temporal dynamics," said CEO Dr. Lena Petrova in an interview. "The world is not a sequence of discrete tokens; it's a continuous flow of events. Chronos-1 is built on this principle from the ground up. It 'remembers' the past not by looking back at every single past event, but by compressing the entire history into its present state, much like a physical system."

The initial reaction from the AI research community is one of cautious optimism. Professor Julian Evans of MIT's CSAIL commented, "The state-space approach is very promising, and the results in the paper are impressive. The key challenge for these architectures has always been their ability to perform content-based reasoning, something at which attention excels. AetherAI's claims of high performance on complex, heterogeneous data will need to be independently replicated. But if they are right, this is a very big deal." The implications for hardware are also significant. Because Chronos-1's architecture is less memory-intensive than transformers and parallelizes differently, it may favor different hardware designs, potentially opening the door for new types of AI accelerators beyond the GPU.

AetherAI has announced that it will release a small, open-source version of Chronos-1 for researchers to validate, while keeping its larger, proprietary models for enterprise partnerships. The startup is targeting initial applications in high-frequency trading, supply chain optimization for large retailers, and predictive maintenance for industrial machinery.

The unveiling of Chronos-1 is a powerful reminder that despite the transformer's current dominance, the book on AI architectures is far from closed. The future of AI will likely not be a single, monolithic architecture but a diverse ecosystem of specialized models tailored to the unique structure of the problems they are meant to solve. Chronos-1 may be the first successful step in building a new class of models designed specifically for the fourth dimension: time.