ChatGPT Toolbox's Newsletter

AI Pulse

🤫 AI Pulse - the AI insight everyone will be talking about (you get it first).

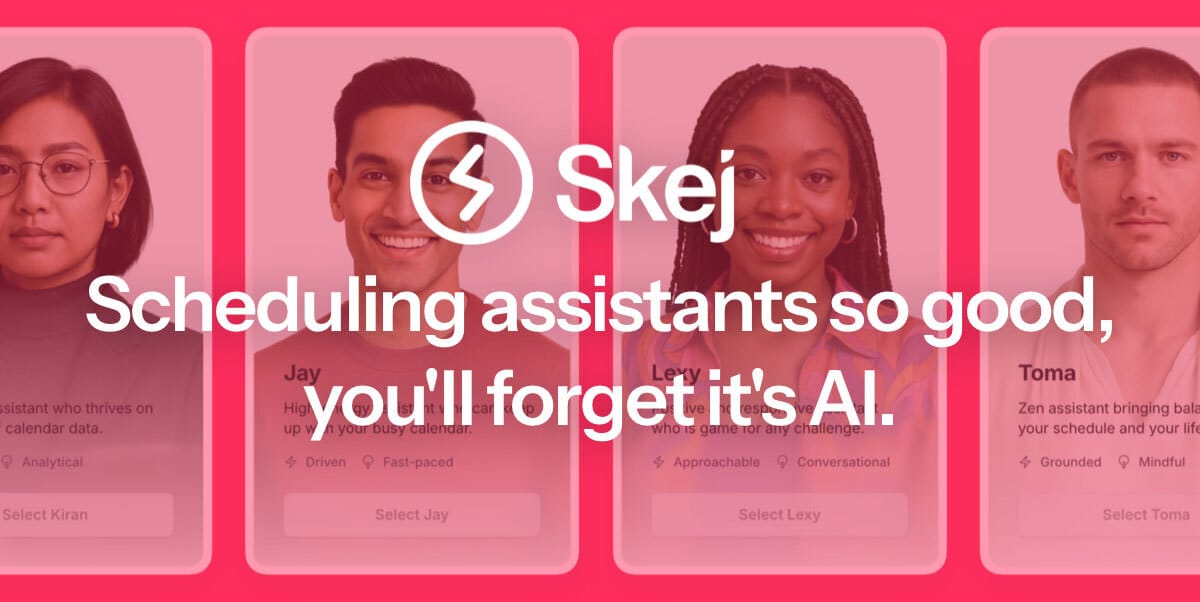

An AI scheduling assistant that lives up to the hype.

Skej is an AI scheduling assistant that works just like a human. You can CC Skej on any email and watch it book all your meetings. It also handles rescheduling and event reminders.

Imagine life with a 24/7 assistant who responds so naturally, you’ll forget it’s AI.

- Smart Scheduling: Skej handles time zones and can scan booking links.
- Customizable: Create assistants with their own names and personalities.
- Flexible: Connect to multiple calendars and email addresses.
- Works Everywhere: Write to Skej on email, text, WhatsApp, and Slack.

Whether you’re scheduling a quick team call or coordinating a sales pitch across the globe, Skej gets it done fast and effortlessly. You’ll never want to schedule a meeting yourself, ever again.

The best part? You can try Skej for free right now.

European Consortium Releases Aethelred-1, a 70B Parameter Open-Source LLM Focused on Linguistic Diversity and Data Privacy

A European research consortium, "EuroAI," yesterday unveiled Aethelred-1, a powerful 70-billion parameter open-source large language model. Based in Paris and Berlin, the project aims to counter the dominance of US-based models by prioritizing Europe's 24 official languages and adhering to strict GDPR principles from the ground up. This release, announced on Monday, August 11, 2025, challenges the current AI landscape by offering a transparent, auditable, and privacy-centric alternative for researchers and businesses.

The release of Aethelred-1 represents a significant geopolitical and technological milestone in the global AI race. Developed over two years with funding from a mix of public and private European entities, the model is explicitly designed to be a "sovereign" alternative to the models emerging from Silicon Valley. The core technical innovation lies not just in its performance, which is reported to be competitive with models like GPT-4 and Claude 3, but in its architecture and data curation, which were guided by European legal and ethical frameworks, most notably the GDPR and the recently implemented EU AI Act.

Technically, Aethelred-1 is a Mixture-of-Experts (MoE) model. Its 70 billion parameters are distributed across eight specialist "expert" networks, and during inference a routing network dynamically selects only two of these experts to process each token. This sparse activation means that the per-token computational cost is roughly that of a much smaller, 17-billion parameter dense model (two of eight experts is about a quarter of the weights), although all 70 billion parameters must still be held in memory. This makes Aethelred-1 remarkably efficient, allowing it to be self-hosted by medium-sized enterprises without colossal computing clusters, a key factor for data sovereignty.
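
The top-2 routing described above can be sketched in a few lines of plain Python. This is a minimal illustration of one common MoE gating variant (softmax over only the two highest router scores), not Aethelred-1's actual router, which the announcement does not describe; the helper names, toy experts, and router scores are all hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[List[float]], List[float]]

def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top2_route(router_logits: Sequence[float]) -> Tuple[List[int], List[float]]:
    """Pick the two highest-scoring experts and renormalize their scores
    into gate weights (softmax over the selected pair only)."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:2]
    return chosen, softmax([router_logits[i] for i in chosen])

def moe_forward(token: List[float], experts: Sequence[Expert],
                router_logits: Sequence[float]) -> Tuple[List[float], List[int]]:
    """Gate-weighted sum of the two selected experts' outputs; the other
    experts are never evaluated for this token."""
    chosen, gates = top2_route(router_logits)
    out = [0.0] * len(token)
    for idx, gate in zip(chosen, gates):
        expert_out = experts[idx](token)
        out = [o + gate * e for o, e in zip(out, expert_out)]
    return out, chosen

def active_params(total: float, num_experts: int, k: int) -> float:
    # Rough estimate that assumes every parameter sits inside an expert;
    # real MoE models also have shared layers that are always active.
    return total * k / num_experts

# Hypothetical toy experts: expert i simply scales its input by (i + 1).
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.3, 0.2]  # made-up router scores
out, used = moe_forward([1.0, 2.0], experts, logits)
print(used)                        # [1, 4]
print(active_params(70e9, 8, 2))   # 17500000000.0
```

Only the two chosen experts are ever called, which is where the compute saving comes from. The 17.5 billion estimate lines up with the roughly 17-billion figure, but it ignores shared components such as attention layers that every token passes through.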

The most distinguishing feature, however, is its training dataset, dubbed "EuroCorpus." Unlike the often opaque, web-scraped datasets used by many of its predecessors, EuroCorpus is a meticulously curated collection of text and code. The consortium reports that over 60% of the data is non-English, with high-quality, balanced representation for all 24 official EU languages. This was achieved through partnerships with national libraries, public broadcasters, and academic institutions across the continent. Furthermore, the entire dataset was filtered using advanced PII (Personally Identifiable Information) redaction tools and underwent a rigorous copyright audit to ensure full compliance with EU law, a direct response to the numerous lawsuits plaguing other AI labs.
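
To make "PII redaction" concrete, here is a toy Python filter that masks e-mail addresses and phone numbers. The patterns are illustrative assumptions, not the consortium's tools (which are not described); regular expressions alone miss names, addresses, and most identifiers, which is why production pipelines add trained entity recognizers.

```python
import re

# Deliberately crude, hypothetical patterns for two kinds of PII.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
# A run of at least 9 digits, spaces, or hyphens that starts and ends with a digit.
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")

def redact(text: str) -> str:
    """Replace e-mail addresses and phone-number-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

print(redact("Contact jan.kowalski@example.pl or +48 123 456 789."))
# Contact [EMAIL] or [PHONE].
```

The phone pattern will also catch some harmless digit runs (for example, a list of years), a typical precision-versus-recall trade-off in redaction.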

Dr. Hélène Dubois, the lead research scientist on the project from Inria in Paris, commented on the release: "Our goal was not just to build a European model, but to build a model on European values. Transparency, privacy, and multilingualism are not afterthoughts or add-ons; they are embedded in the model's architecture. Researchers can inspect the dataset, audit the training logs, and understand the model's 'constitutional' principles, which are directly derived from EU charters on fundamental rights."

The implications are far-reaching. For European businesses, particularly in sensitive sectors like finance and healthcare, Aethelred-1 offers a path to leveraging state-of-the-art AI without sending potentially sensitive data to servers outside the EU. For the research community, the model's transparency provides an invaluable resource for studying AI safety, bias, and alignment. Early reviews from beta testers have praised the model's nuanced understanding of cultural contexts in languages other than English, a common weakness in US-centric models. For instance, it has demonstrated a sophisticated grasp of idioms and legal concepts specific to countries like Poland and Greece.

Naturally, the release has generated a competitive response. Some experts suggest that while its GDPR-native status is a major advantage in Europe, its performance on highly technical English-language tasks, such as complex code generation, may still lag slightly behind the very latest proprietary models. However, the open-source nature of Aethelred-1 means a global community of developers will now be able to fine-tune and improve it, potentially closing that gap quickly.

The release of Aethelred-1 is more than a technical achievement; it's a statement of intent. It demonstrates a viable path for developing powerful AI that aligns with specific regional values and legal frameworks. The focus now shifts to adoption and the ecosystem that will grow around the model. If successful, it could pave the way for other regions to develop their own "sovereign" AIs, creating a more diverse and multipolar global AI landscape.

CERN's New "Symmetry AI" Uncovers a Novel Mathematical Framework Potentially Linking General Relativity and Quantum Mechanics

In a landmark announcement from Geneva, physicists at CERN revealed on Monday that an AI system named "Symmetry AI" has discovered a previously unknown mathematical symmetry. This discovery, published today, August 12, 2025, in the journal Nature Physics, provides a tantalizing new bridge between Einstein's theory of general relativity and the Standard Model of particle physics. The AI, specifically designed for abstract pattern recognition in theoretical physics, has generated a set of equations that could provide a new, testable pathway towards a unified theory of quantum gravity.

The quest to unite general relativity—the theory of the very large (stars, galaxies, gravity)—and quantum mechanics—the theory of the very small (particles, forces)—has been the holy grail of theoretical physics for nearly a century. The two theories are phenomenally successful in their own domains but are based on incompatible mathematical foundations. This announcement from CERN's theory department suggests that AI may be the key to breaking this impasse. The "Symmetry AI" project was initiated to explore whether advanced machine learning could perceive profound connections in abstract mathematical spaces that are beyond human intuition.

Symmetry AI is not a large language model. It is a highly specialized form of Graph Neural Network (GNN) combined with a symbolic regression engine. The system was trained on the core mathematical structures of both theories. For general relativity, this included the language of differential geometry and tensor calculus. For the Standard Model of particle physics, it was trained on the principles of quantum field theory and group theory, particularly the symmetries described by the Lie group SU(3)×SU(2)×U(1). The AI's objective was not to crunch experimental data, but to explore the space of possible mathematical extensions and unifications, searching for a larger, more elegant parent structure that could contain both theories as special cases.
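
For readers unfamiliar with the notation, the Standard Model gauge group and its size can be written out as a short worked equation, using the standard result that SU(n) has n² - 1 generators:

```latex
\begin{aligned}
G_{\mathrm{SM}} &= SU(3)_C \times SU(2)_L \times U(1)_Y, \qquad \dim SU(n) = n^2 - 1, \quad \dim U(1) = 1,\\
\dim G_{\mathrm{SM}} &= (3^2 - 1) + (2^2 - 1) + 1 = 8 + 3 + 1 = 12.
\end{aligned}
```

Each generator corresponds to a gauge field: eight gluons for the strong force (C for color), plus four electroweak fields (L for left-handed weak isospin, Y for hypercharge) that become the W⁺, W⁻, Z, and photon after electroweak symmetry breaking.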

According to the paper, the AI system, after months of autonomous operation, proposed a novel framework based on E8, an exceptional Lie group previously explored in string theory but often dismissed as too complex to yield testable predictions. The AI's breakthrough was in identifying a new way to "break" this symmetry. It generated a pathway of transformations that, under low-energy conditions, resolves into the separate, familiar mathematics of gravity and particle physics. Crucially, it also predicts a new particle: a scalar boson, distinct from the Higgs boson, with specific properties that could potentially be detected in future high-energy experiments.
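
"Breaking" a large symmetry means passing to successively smaller subgroups until a familiar one remains. As an illustration only, a textbook grand-unification chain that embeds the Standard Model gauge group in E8 runs as follows. This is not the pathway reported in the paper, which the announcement does not spell out, and gravity does not appear in this purely gauge-theoretic chain:

```latex
\begin{aligned}
E_8 &\supset E_6 \times SU(3), &\qquad \dim E_8 &= 248,\ \dim E_6 = 78\\
E_6 &\supset SO(10) \times U(1), & \dim SO(10) &= 45\\
SO(10) &\supset SU(5) \times U(1), & \dim SU(5) &= 24\\
SU(5) &\supset SU(3) \times SU(2) \times U(1), & \dim G_{\mathrm{SM}} &= 12
\end{aligned}
```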

Dr. Eva Nowak, the head of the theoretical physics group at CERN, stated in a press conference, "We did not ask the AI to find a specific solution. We presented it with the fundamental axioms of our two most successful theories and tasked it with finding mathematical consistency—a shared aesthetic, if you will. The result is astonishing. It's a set of equations that no human physicist we know of has ever written down, yet they are internally consistent and surprisingly elegant. It has given us a new map to a place we didn't even know existed."

The implications are profound, not only for physics but for the scientific method itself. This represents a shift from AI as a tool for data analysis to AI as a creative partner in pure theory. The AI was not merely fitting curves to data points; it was generating novel, abstract hypotheses. Skepticism, of course, remains. The AI's output is a mathematical proposal, not a proven physical reality. The real work for human physicists begins now: to rigorously analyze these equations, derive their full physical consequences, and design experiments to test their predictions.

"It’s not that the AI has solved quantum gravity," commented a leading physicist from Caltech, who was not involved in the study. "What it has done is crack open a door that we had all been pushing against for decades. It has provided a concrete, mathematically sound research program that could occupy physicists for the next 20 years. Now, we have to walk through that door and see what's really there."

The next steps involve simulating the conditions predicted by the AI's equations and searching for the signature of its proposed new particle in existing and future data from the Large Hadron Collider. If this AI-generated theory holds even a kernel of truth, it will mark a turning point in our understanding of the universe and in our methods of discovering that understanding.

ChronoAI Releases "Temporal Diffusion Weaving," A New Architecture for Long-Form, Coherent Video Generation

The AI startup ChronoAI yesterday released a research paper and a set of stunning demonstration videos for its new generative video model, which uses a novel architecture called "Temporal Diffusion Weaving" (TDW). The San Francisco-based company claims its model can generate videos up to five minutes in length with unprecedented temporal consistency, meaning objects, characters, and environments remain stable and coherent throughout the entire duration. This breakthrough, announced August 11, 2025, directly addresses the primary weakness of previous video generation models, which often suffer from flickering, object warping, and loss of identity over more than a few seconds.

Generative video has been a major focus for AI labs, but progress has been hampered by the challenge of long-term consistency. While models can produce impressive short clips, the accumulation of tiny errors from one frame to the next quickly leads to a complete breakdown in realism for longer sequences. Characters might change clothes, objects might appear or disappear, and lighting can shift unnaturally. ChronoAI's TDW architecture tackles this problem by fundamentally rethinking how video is synthesized.

Standard video diffusion models typically operate frame-by-frame or in small batches of frames, using the previous frame(s) to predict the next one. This autoregressive process is what leads to error accumulation. Temporal Diffusion Weaving, by contrast, works on a global video structure. First, it uses a large language model to interpret the user's prompt and create a "storyboard" of key scenes and character states over the full duration of the requested video. This storyboard acts as a set of high-level constraints. It then creates a low-resolution "scaffolding" video for the entire duration, which maps out object positions, camera motion, and basic lighting.
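
The error-accumulation argument can be made concrete with a toy numerical model in Python. It is a deliberate simplification, not a video model: each frame's "error" is a single number, per-step errors are assumed to be drawn uniformly from a small range, and "anchored" generation is idealized as each frame carrying only its own error, which is the effect a global storyboard and scaffold are meant to approximate.

```python
import random
from typing import List

def autoregressive_error(step_errors: List[float]) -> List[float]:
    """Frame t is predicted from generated frame t-1, so it inherits every
    earlier mistake: its total error is the running sum of per-step errors."""
    total, out = 0.0, []
    for e in step_errors:
        total += e
        out.append(total)
    return out

def anchored_error(step_errors: List[float]) -> List[float]:
    """Idealized plan-anchored generation: each frame is tied to a shared
    global plan, so it carries only its own per-step error."""
    return list(step_errors)

rng = random.Random(0)  # fixed seed for reproducibility
num_frames = 5 * 60 * 24  # five minutes at an assumed 24 frames per second
errors = [rng.uniform(-0.01, 0.01) for _ in range(num_frames)]  # assumed error size
ar = autoregressive_error(errors)
anc = anchored_error(errors)
print(f"worst autoregressive drift: {max(abs(x) for x in ar):.3f}")
print(f"worst anchored error:       {max(abs(x) for x in anc):.3f}")
```

Because the autoregressive error is a running sum, its drift keeps growing with video length (roughly with the square root of the frame count for zero-mean noise, and linearly if errors are biased), while the anchored error stays within the per-frame bound however long the clip runs.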

The "weaving" process is the core innovation. Instead of generating frames sequentially, the TDW model works on the entire video simultaneously, but at different temporal frequencies. It refines the global motion and a character's overall appearance across the full five minutes in one pass, and then in subsequent passes, it "weaves" in finer details at shorter time scales—like the movement of cloth, facial expressions, and environmental textures. This is analogous to how a painter might first sketch out the entire composition, then block in large areas of color, and only at the end add the fine brushstrokes. The process is governed by a temporal attention mechanism that allows each frame to "see" all other frames, ensuring that, for example, a character's shirt at minute four is identical to the one worn at second ten.
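
The idea that every frame can "see" every other frame is ordinary non-causal self-attention applied along the time axis. The Python sketch below assumes the simplest possible form (raw per-frame feature vectors used directly as queries, keys, and values, a single head, no learned projections); ChronoAI's actual mechanism is not described at that level of detail, and the three-frame features are hypothetical.

```python
import math
from typing import List, Tuple

def temporal_attention(frames: List[List[float]]) -> Tuple[List[List[float]], List[List[float]]]:
    """Full (non-causal) self-attention over time: each frame's output is a
    softmax-weighted average of ALL frames, earlier and later.
    Simplifying assumption: raw features serve as queries, keys, and values."""
    d = len(frames[0])
    outputs, weight_rows = [], []
    for q in frames:  # one query per frame, scored against every frame
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in frames]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        weights = [e / z for e in exps]
        outputs.append([sum(w * v[j] for w, v in zip(weights, frames)) for j in range(d)])
        weight_rows.append(weights)
    return outputs, weight_rows

# Hypothetical 2-D features for three frames; frames 0 and 2 look alike.
frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
_, weights = temporal_attention(frames)
print([round(w, 3) for w in weights[0]])  # [0.401, 0.198, 0.401]
```

Frame 0 gives the later frame 2 exactly as much weight as itself, which is the "see all other frames" property. The nested loop also makes the cost grow quadratically with the number of frames, one reason full-length attention over minutes of video is computationally demanding.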

"We realized the frame-by-frame approach was a dead end for long-form content," said Maya Patel, CEO and co-founder of ChronoAI. "You can't create a coherent movie by only looking at the last half-second. Our model thinks like a film director—it plans the whole arc, blocks the scenes, and then fills in the details, ensuring everything serves the global narrative. The consistency isn't an afterthought; it's the foundation of the generation process."

The demonstration videos are striking. One shows a character walking through a bustling fantasy city for three minutes, interacting with various elements, with their appearance and the city's architecture remaining perfectly stable. Another shows a detailed product demonstration with consistent branding and object physics. The implications for the entertainment, marketing, and education industries are enormous. This technology could drastically lower the cost of producing animated content, special effects, and marketing materials.

However, the advance also raises significant ethical concerns. The ability to create long, coherent, and realistic videos of people or events that never happened is a powerful tool for misinformation. Experts in AI safety have immediately called for robust, non-removable watermarking and provenance tracking for any content generated by such models. ChronoAI has stated it is implementing the C2PA standard for content provenance but acknowledges that more safeguards will be necessary as the technology evolves. The computational requirements for TDW are also immense, likely restricting its use to large, well-funded organizations for the time being.

ChronoAI has not yet released the model publicly, opting for a staged rollout through industry partners to better understand its capabilities and risks. This breakthrough sets a new benchmark in the field of generative video. The focus of competitors will now inevitably shift from simply generating short, high-fidelity clips to solving the grand challenge of long-term temporal coherence.

Global Leaders Sign "Geneva Accords on AI Proliferation" Establishing First International AI Safety Body

In a historic summit in Geneva, leaders and representatives of 45 signatories, including the United States, China, the United Kingdom, and the European Union, signed the "Geneva Accords on AI Proliferation" on Tuesday, August 12, 2025. The landmark treaty establishes the first-ever international regulatory body for artificial intelligence, the International AI Safety Organization (IASO), and sets binding technical standards for the development and deployment of highly capable "frontier" AI models. This agreement moves beyond the general principles of previous AI summits, creating concrete, verifiable obligations for AI developers worldwide.

The signing of the Accords is the culmination of over a year of tense negotiations following a series of high-profile AI safety incidents and warnings from the scientific community about the potential risks of unconstrained AI development. The treaty's central purpose is to prevent a "race to the bottom" in AI safety and to establish a global framework for managing the proliferation of models that possess capabilities with potential for large-scale misuse or unpredictable behavior.

The treaty's core technical provisions are what set it apart. First, it mandates the universal adoption of the C2PA (Coalition for Content Provenance and Authenticity) standard for all publicly available generative AI models developed within signatory nations. This creates a cryptographic chain of custody for AI-generated content, making it easier to distinguish authentic media from synthetic deepfakes. Second, it establishes a tiered system for AI models based on their computational training cost, measured in Floating Point Operations (FLOPs), and their demonstrated capabilities in benchmark tests. Models exceeding a certain threshold (defined in the treaty's annex) are designated as "frontier systems" and are subject to stringent pre-deployment requirements.
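
The compute side of that tiering can be sketched with the widely used approximation that training a dense transformer costs about 6 × parameters × training tokens FLOPs. In the Python sketch below, the threshold is a placeholder (the annex value isn't given), the model sizes and token counts are illustrative, and the capability flag is a hypothetical stand-in for benchmark results the treaty text here does not detail.

```python
# Hypothetical placeholder: the Accords' actual threshold is in the treaty annex.
FRONTIER_FLOP_THRESHOLD = 1e26

def training_flops(params: float, tokens: float) -> float:
    """Common rule of thumb for dense transformer training compute:
    about 6 FLOPs per parameter per training token (forward + backward)."""
    return 6.0 * params * tokens

def classify(params: float, tokens: float, exceeds_capability_bar: bool = False,
             threshold: float = FRONTIER_FLOP_THRESHOLD) -> str:
    """Toy two-tier rule: 'frontier' if estimated training compute reaches the
    threshold or the model is flagged by (hypothetical) capability benchmarks."""
    if training_flops(params, tokens) >= threshold or exceeds_capability_bar:
        return "frontier"
    return "standard"

print(f"{training_flops(70e9, 15e12):.1e}")  # 6.3e+24
print(classify(70e9, 15e12))                # standard
print(classify(1e12, 20e12))                # frontier
```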

These requirements include mandatory third-party audits conducted by accredited auditors under the supervision of the newly formed IASO. These audits will involve extensive red-teaming to probe for dangerous capabilities, such as autonomous replication, cybersecurity vulnerabilities, and potential for manipulation of critical infrastructure. The results of these audits must be submitted to the IASO before a model can be widely deployed. The treaty also includes provisions for secure "model-sharing" protocols, allowing international safety teams to inspect the weights and architectures of frontier models under controlled conditions.

"Today, we are choosing cooperation over chaos," stated the UN Secretary-General at the signing ceremony. "The Geneva Accords recognize that the most powerful AI systems are a shared global resource and a shared global responsibility. The IASO will not be a toothless watchdog; it will be a technically proficient organization with the authority to inspect, to verify, and to recommend sanctions for non-compliance."

The creation of the IASO, to be headquartered in Geneva, is perhaps the most significant outcome. It will be staffed by a combination of AI researchers, cybersecurity experts, and policy specialists from member nations. Its mandate includes setting and updating technical safety standards, accrediting auditors, and serving as a global clearinghouse for AI safety incidents. The inclusion of both the US and China, the world's two leading AI powers, is seen as a major diplomatic victory, though experts caution that enforcement and trust will be ongoing challenges.

The AI industry has responded with cautious optimism. Major labs like OpenAI, Google DeepMind, and Anthropic, who have long called for some form of regulation, issued statements supporting the Accords. They see it as a way to level the playing field and ensure that all major players adhere to the same high safety standards. However, some open-source advocates and smaller startups have expressed concern that the high cost of compliance with IASO audits could stifle innovation and entrench the market dominance of large, incumbent players. The treaty attempts to address this with a fund to help smaller entities cover the cost of safety audits.

The Geneva Accords are not a final solution but a foundational framework. The immediate next steps involve the monumental task of staffing the IASO and finalizing the granular technical specifications for the auditing process. The success of this treaty will depend on the willingness of signatory nations to enforce its rules and the ability of the IASO to adapt to the breathtakingly fast pace of AI innovation.

MIT Researchers Unveil "SynapseFlow" Neuromorphic Chip Running a 1-Billion Parameter LLM on Milliwatts of Power

Researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have demonstrated a new neuromorphic chip, named "SynapseFlow," capable of running a 1-billion parameter large language model using only milliwatts of power. A paper detailing the chip's architecture and performance, published yesterday, August 11, 2025, shows it is over 100 times more energy-efficient than current mobile NPUs (Neural Processing Units). This breakthrough could unlock the ability to run complex AI models directly on small, battery-powered devices like smartphones, wearables, and sensors, eliminating the need for a constant cloud connection.

The advancement tackles one of the biggest bottlenecks in deploying advanced AI: energy consumption. While models have become incredibly powerful, they require vast amounts of energy, typically handled by power-hungry GPUs in data centers. Edge AI has been limited to much smaller, less capable models. The SynapseFlow chip represents a fundamental paradigm shift away from the traditional von Neumann architecture used in virtually all modern computers, where processing and memory are physically separate. This separation creates an "energy bottleneck" as data must be constantly shuttled back and forth.

SynapseFlow is a true neuromorphic, or "brain-inspired," design. It employs an analog, in-memory computing architecture in which the mathematical operations required for AI inference (primarily matrix multiplications) are performed directly within the memory cells themselves. The chip uses resistive random-access memory (RRAM) to store the neural network's weights: each cell's resistance is programmed so that its conductance (the inverse of resistance) represents one of the model's parameters. When a voltage representing input data is applied, Ohm's law (I = V/R) means the resulting current is the product of that voltage and the cell's conductance, so each cell performs a multiplication. The currents from all cells on a shared output line then add together (Kirchhoff's current law), supplying the summation step of a matrix multiplication. This eliminates the data-movement bottleneck and performs computation at the speed of physics with minimal energy loss.
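
The crossbar arithmetic can be simulated in a few lines of Python. This is an idealized sketch: it ignores wire resistance, device noise, the converters between digital and analog signals, and how negative weights are encoded, none of which the announcement describes. The conductance and voltage values are made up and in arbitrary units.

```python
from typing import List

def crossbar_mvm(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Idealized analog crossbar. conductances[i][j] (G = 1/R) is the RRAM
    cell where input row i crosses output column j.
    Ohm's law: each cell passes current G[i][j] * V[i].
    Kirchhoff's current law: currents on the same column add up, so column j
    carries sum_i V[i] * G[i][j], one entry of a matrix-vector product."""
    currents = [0.0] * len(conductances[0])
    for g_row, v in zip(conductances, voltages):
        for j, g in enumerate(g_row):
            currents[j] += g * v  # I = V / R = G * V for this single cell
    return currents

# Hypothetical 3x2 weight matrix stored as conductances, and a 3-element input.
G = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
V = [1.0, 0.5, 2.0]
print(crossbar_mvm(G, V))  # [12.5, 16.0]
```

In software the double loop runs step by step; in the analog array every cell conducts at once, which is why the matrix-vector product costs roughly one read operation rather than one multiply per weight.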

Furthermore, the chip operates using Spiking Neural Networks (SNNs). Unlike traditional Artificial Neural Networks (ANNs) that process continuous numerical values in every cycle, SNNs are event-driven. Neurons only "fire" (emit a spike) and consume significant energy when the input they have accumulated crosses a threshold, similar to biological neurons. This asynchronous, sparse processing means that large parts of the chip are inactive at any given moment, drastically reducing power consumption.
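
A leaky integrate-and-fire (LIF) neuron, the standard textbook spiking-neuron model, shows what "event-driven" means in practice. The Python sketch below is a simplified discrete-time version; the threshold and leak values are arbitrary, and SynapseFlow's actual neuron circuit is not described in the announcement.

```python
from typing import List, Tuple

def lif_neuron(events: List[Tuple[int, float]], threshold: float = 1.0,
               leak: float = 0.9) -> List[int]:
    """Event-driven leaky integrate-and-fire neuron.

    `events` is a time-sorted list of (time_step, weight) input spikes.
    Nothing is computed between events: when one arrives, the decay since the
    previous event is applied at once (leak ** elapsed_steps), the weight is
    added, and the neuron fires and resets if the potential reaches `threshold`.
    Returns the time steps at which the neuron spiked."""
    v, last_t, out_spikes = 0.0, 0, []
    for t, weight in events:
        v = v * leak ** (t - last_t) + weight
        last_t = t
        if v >= threshold:
            out_spikes.append(t)
            v = 0.0  # reset after firing
    return out_spikes

print(lif_neuron([(0, 0.6), (1, 0.6)]))   # [1]: two close inputs add up and fire
print(lif_neuron([(0, 0.6), (10, 0.6)]))  # []: the first input leaked away
print(lif_neuron([]))                     # []: no input, no work, no spikes
```

The loop runs once per input spike rather than once per clock tick, so silence costs nothing; that sparsity is the source of the energy savings described above.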

"We've moved from mimicking the brain's function in software to mimicking its structure and efficiency in silicon," explained Dr. Kenji Tanaka, the lead author of the study. "The SynapseFlow chip doesn't just calculate faster; it calculates smarter. By only activating the necessary circuits in response to incoming data 'spikes,' we achieve power efficiency that is orders of magnitude better than what's possible with digital designs."

In their demonstration, the MIT team ran a specialized, 1-billion parameter language model that had been converted to an SNN format on the SynapseFlow chip. The chip successfully performed complex tasks like summarization and sentiment analysis while consuming less than 50 milliwatts of power, a level suitable for a smartwatch battery. This is a stark contrast to the tens or hundreds of watts required to run similar-sized models on conventional hardware.
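
A quick back-of-the-envelope calculation shows why 50 milliwatts matters. The battery figures are assumptions chosen to be typical of a smartwatch (300 mAh at 3.85 V), 10 W stands in for the low end of the "tens of watts" quoted for conventional hardware, and the result covers the chip alone, not a display or radio.

```python
def runtime_hours(capacity_mah: float, volts: float, load_watts: float) -> float:
    """Hours a battery can sustain a constant load: energy (Wh) / power (W)."""
    energy_wh = capacity_mah / 1000.0 * volts
    return energy_wh / load_watts

# Assumed smartwatch battery: 300 mAh at 3.85 V, i.e. 1.155 Wh.
chip = runtime_hours(300, 3.85, 0.050)         # SynapseFlow's reported upper bound
conventional = runtime_hours(300, 3.85, 10.0)  # low end of "tens of watts"
print(f"{chip:.1f} h vs {conventional * 60:.1f} min")  # 23.1 h vs 6.9 min
```

Since 50 mW is described as an upper bound, roughly a day of continuous inference is a floor for this assumed battery, against minutes at conventional power levels.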

The implications for personal technology and the Internet of Things (IoT) are staggering. Imagine a smart assistant on your phone that is entirely private because it never sends your conversations to the cloud. Consider medical sensors that can perform complex, real-time analysis of biometric data locally, or autonomous drones that can process rich visual information without draining their batteries. This technology enables a future of truly intelligent, autonomous, and private edge devices.

The path to commercialization still has challenges. Manufacturing analog chips at scale is notoriously difficult, and programming for event-driven, asynchronous hardware requires a completely new software stack. However, the SynapseFlow prototype provides a compelling proof-of-concept. The next stage of research will focus on scaling the design, improving manufacturing yield, and developing the software tools needed for developers to harness this new class of processor. This breakthrough signals that the future of AI may not just be in the cloud, but all around us, running silently and efficiently on the devices we use every day.